
Mediapipe to OSC: camera-based motion tracking for expanded performance

by Tom Mitchell

Gestural control for creative applications and music performance is an area that fascinates me, and in this residency I’ve been exploring modern camera-based motion tracking methods. I’m publishing an open-source project called mediapipe2osc, which uses Google’s MediaPipe framework to perform 3D skeletal tracking with an ordinary 2D webcam and streams the data points via Open Sound Control for easy use in creative and music applications. In this post I discuss the history of, and recent developments in, hand tracking before explaining exactly what mediapipe2osc does. To jump straight to the code, see the repository on GitHub: mediapipe2osc

Traditional musical instruments are difficult to play. Musicians train their bodies to move fluidly and precisely in awkward positions dictated by their instruments. The same is true of the computing interfaces we use every day, like keyboards and mice, which are designed around the technical capabilities and affordances of their components rather than the most natural ways we might choose to express ourselves. We have so much invested in the most widespread computer interfaces that any alternative would need to offer a significant advantage before we see change. There’s a name for this: ‘Qwerty Syndrome’, named after the myth that the QWERTY keyboard was designed to reduce typewriting speed and prevent jams.

Gestures are a natural way for us to communicate, and we often make them subconsciously. However, our gestures are seldom used directly in our interactions with machines because motion capture is still very much an open problem: every available technology has downsides.

Gestural interaction depends on accurately tracking the fine-motor movements of the hands, and the associated technologies can be broadly separated into two categories: wearable and camera-based approaches.

Wearable technologies generally involve embedding sensors into textiles to create datagloves. The earliest dataglove was created in the 1980s, and while the technology has advanced considerably since then, the general principles remain the same. Datagloves are challenging to make. It is hard enough to make ordinary gloves that fit different hands comfortably, but adding bend sensors, wiring, inertial sensors, vibration motors and LEDs, and attaching circuit boards, batteries, enclosures, microcontrollers and wireless transceivers, is really hard. On top of all this, gloves should not inhibit the wearer and must be washable. We’ve overcome many of these challenges with the MiMU Gloves, but the process is labour intensive and expensive, especially when you are committed to ethical manufacturing standards.

MiMU Glove

Datagloves are ideal for expanded performances and experiences, as they become part of a ‘costume’ and are typically only used for short periods. For more frequent use they can be inconvenient, because in general people don’t want to wear gloves for extended periods of time. However, some exciting and fairly recent developments could point towards the future of gestural interaction: the movements of the fingers can be sensed at the wrist using electromyography, a technique for measuring electrical activity in the nerves and muscles. Facebook have been buying up the major companies working in this area and recently released an impressive preview video of some forthcoming technology. If this can be miniaturised into wrist straps, it could have significant implications for the future of immersive and augmented reality.

Facebook's Wrist-Worn Neural Finger Tracking Prototype

An alternative motion tracking approach is to leave the performer completely unencumbered and model the way in which we ourselves sense gestures: through the visual channel. Camera-based motion tracking typically uses sophisticated machine vision techniques to estimate the position and motion of the hands in real time. However, camera-based methods are far from a panacea: they depend on external, sometimes expensive, technology, and the workspace is limited to the camera’s field of view. Furthermore, they are particularly susceptible to tracking errors when parts of the hands are obscured from view (occluded). For example, a single camera cannot ‘see’ every finger when the hand makes a fist, which makes estimating the positions of the joints extremely difficult. Camera-based methods also have frame rate limitations (critical for music applications), and the algorithms that identify hands within real-time moving images are processor intensive. However, most of these limitations are technical in nature, and incredible progress has been made in recent years.

Until recently, camera-based tracking methods required specialist technology: either multiple cameras, or single cameras that incorporate an infra-red depth sensor. Facebook’s hand capture system using 124 (!!!) cameras is a good example of the former, while the Leap Motion and Microsoft Azure Kinect are current examples of the latter.

Researchers at Carnegie Mellon University made a huge breakthrough in 2019 when they published OpenPose, a set of algorithms that perform skeletal tracking using ordinary webcams like the ones found in most modern smartphones and laptops. The image processing uses deep learning and requires serious computational power to run, much more than is typically available in a standard modern laptop. However, Google optimised this process with PoseNet, which runs in real time in a browser; see, for example, this neat little musical experiment called Body Synth. Google have since made further improvements and in 2020 released MediaPipe, which includes some impressively accurate and fast body and hand tracking algorithms.

OpenPose Example

MediaPipe Hands Example

MediaPipe Pose Example

MediaPipe is a substantial framework, and incorporating it into another project is not a trivial process. This technical obstacle has restricted its uptake in the artistic areas of expanded performance. Consequently, during my fellowship I’ve spent some time studying the framework, set up a codebase on GitHub, and modified the Hand Tracking project to extract the ‘landmarks’ (3D hand coordinates) and stream them over a network socket using Open Sound Control (OSC). OSC is a widely used protocol for exchanging control data and is supported by almost all of the programming environments used by artists, creative technologists and hobbyists.

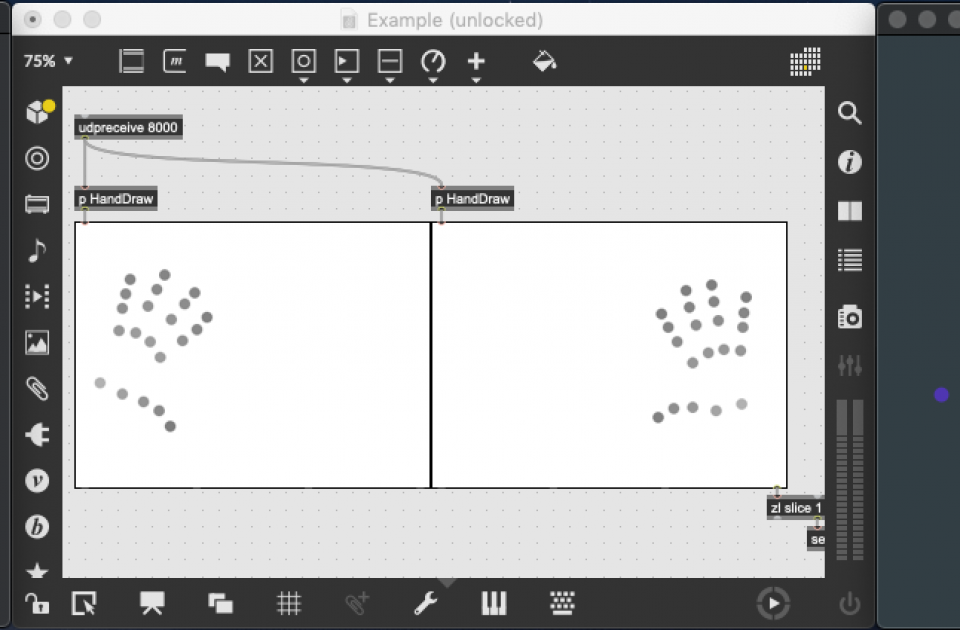


mediapipe2osc showing landmarks in MaxMSP and JUCE

If you are interested in taking a look, please visit the repository, where you will find the code, build instructions and example projects for a number of different environments. I’ll be expanding the example projects, and I’ll also make similar modifications to the Human Pose, Face Mesh, Hand and Holistic Tracking examples.

For further information and details, please refer to the README in the GitHub repository: